Prof. Chris Harrison of CMU visited the DGP to talk about his work in mobile sensing.

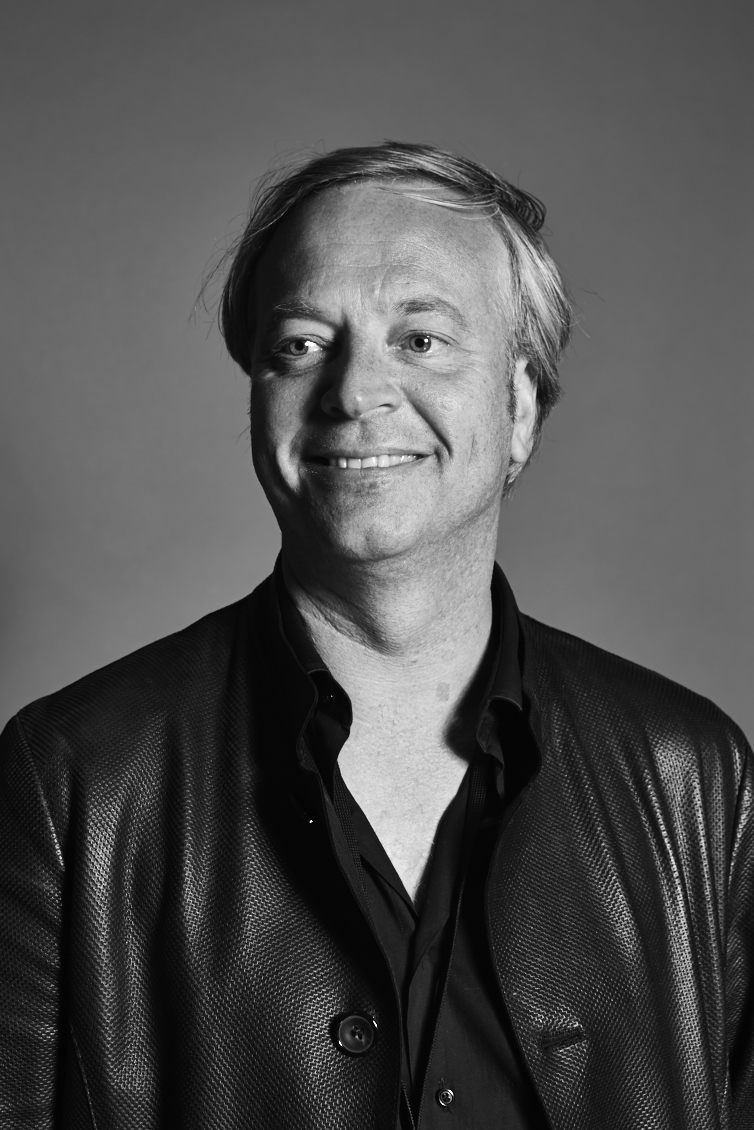

BIO

Chris is an Assistant Professor of Human-Computer Interaction at Carnegie Mellon University. He broadly investigates novel sensing technologies and interaction techniques, especially those that empower people to interact with small devices in big ways. He has been named one of Forbes' top 30 scientists under 30, one of MIT Technology Review's top 35 innovators under 35, a Young Scientist by the World Economic Forum and one of six innovators to watch by Smithsonian. He has been awarded fellowships by Google, Qualcomm, Microsoft Research and the Packard Foundation. He is also the CTO of Qeexo, a touchscreen technology startup. When not in the lab, Chris can be found welding sculptures, visiting remote corners of the globe and restoring his old house.

TITLE

Interacting with Small Devices in Big Ways

ABSTRACT

Eight years ago, multi-touch devices went mainstream and changed our field, the industry and our lives. In that time, mobile devices have become much more capable, yet the core user experience has evolved little. Contemporary touch gestures rely on poking screens with different numbers of fingers: one-finger tap, two-finger pinch, three-finger swipe and so on. We often label these as natural interactions, yet the only place I perform these gestures is on my touchscreen device. We are also too quick to blame the "fat finger" problem for many of our touch-interface woes; if a zipper or pen were too small to use, we would simply call that bad design. Fortunately, our fingers and hands are amazing, and with good technology and design, we can elevate touch interaction to new heights. I believe the era of multi-touch is coming to a close and that we are on the eve of an exciting new age of rich-touch devices and experiences.