A Physically-Based Drawing Program Prototype

This is a drawing program whose goal was to mimic a physical desktop. It was a project for a Topics in Interactive Computing course (CSC 2524) taught by Ravin Balakrishnan.

The inspiration for this project came from a number of sources. Artists in the real world use both of their hands to manipulate the paper and/or the tools they use to draw. This is a stronger direct manipulation paradigm than the typical point-and-click interface. Dr. Hiroshi Ishii's work on tangible user interfaces suggests using everyday objects as computing interfaces. In addition, multi-hand and multi-touch interfaces, such as Digital Tape Drawing (publication #9) or MERL's DiamondTouch, allow the computer to interpret manipulation of a virtual object at more than one location at a time. This, coupled with the simulation of the physical desktop on the computer (see BumpTop), translates into a compelling metaphor for drawing on the computer.

To implement physxDraw, I needed both a multi-touch hardware device and some physics simulation software. Luckily, our lab is equipped with a DiamondTouch surface. It was easy to hook up a projector to display directly on the surface, completing the direct manipulation metaphor.

The physics library came from Ageia and is now NVIDIA PhysX. It's a multithreaded physics library designed for games, and it has hardware and software support! It took a little while to get used to, but it runs really well.

Of course, I also used OpenGL and GLUT. Since the DiamondTouch surface and the graphics ran at different frame rates, I moved the DiamondTouch input processing to a separate thread to improve performance.
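To illustrate the idea of decoupling input from rendering, here is a minimal Python sketch. It is not the physxDraw code: `read_frame` is a hypothetical stand-in for whatever blocking call the device driver provides, and `start_input_thread` and `drain` are names made up for this example. The input thread runs at the device's own pace and hands frames to the render loop through a thread-safe queue.

```python
import queue
import threading


def start_input_thread(read_frame, frames, stop):
    """Poll a (hypothetical) touch device on its own thread.

    read_frame: callable that blocks until the next input frame and
                returns it, or returns None when the device is closed.
    frames:     queue.Queue shared with the render loop.
    stop:       threading.Event used to ask the thread to exit.
    """
    def worker():
        while not stop.is_set():
            frame = read_frame()
            if frame is None:
                break
            frames.put(frame)

    thread = threading.Thread(target=worker, daemon=True)
    thread.start()
    return thread


def drain(frames):
    """Called once per rendered frame: collect queued input without blocking."""
    pending = []
    while True:
        try:
            pending.append(frames.get_nowait())
        except queue.Empty:
            return pending
```

The render loop would call `drain(frames)` at the start of each frame, so a slow or fast input device never stalls drawing.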

The goal of physxDraw was to provide physical tools within the computer for the user to draw with. As such, the basic interface provides a pencil, an eraser, and a ruler. The pencil and eraser could be used like the usual computer tools, but in order to draw straight lines, the user had to manipulate both the pencil and the ruler using both hands, just like in the real world.

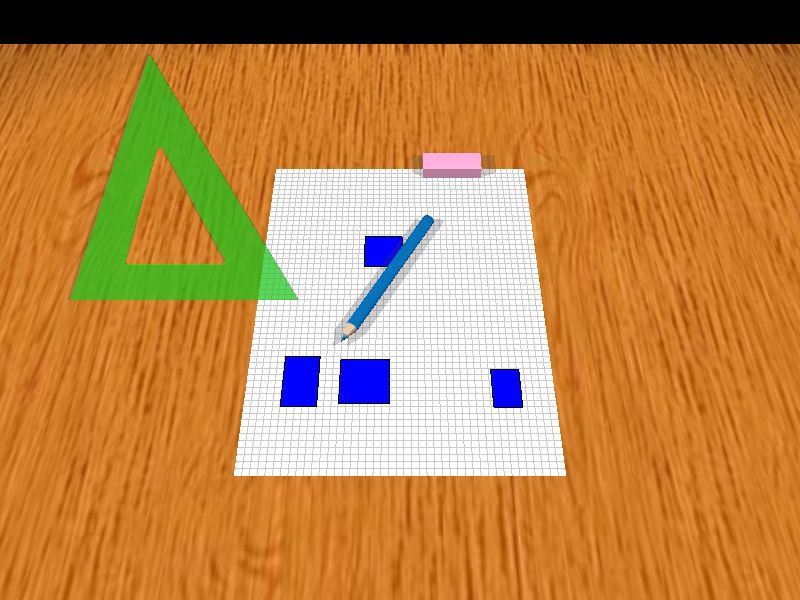

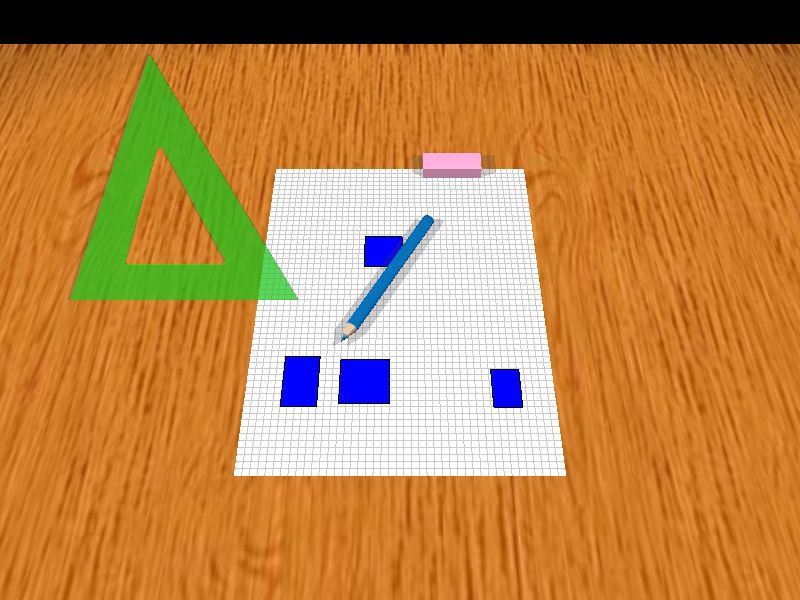

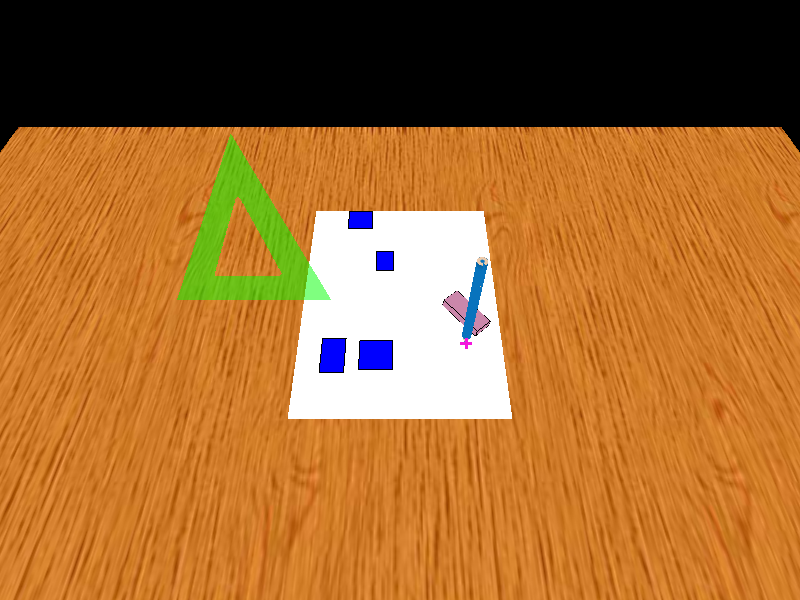

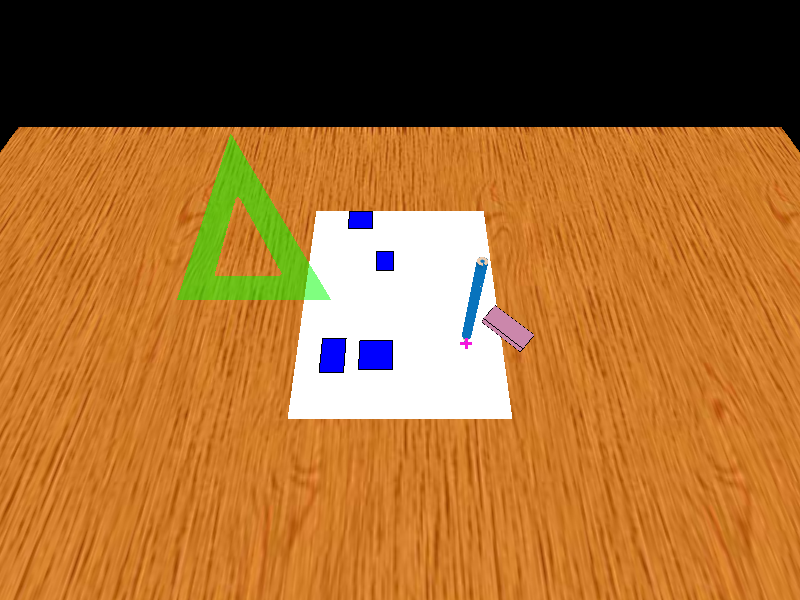

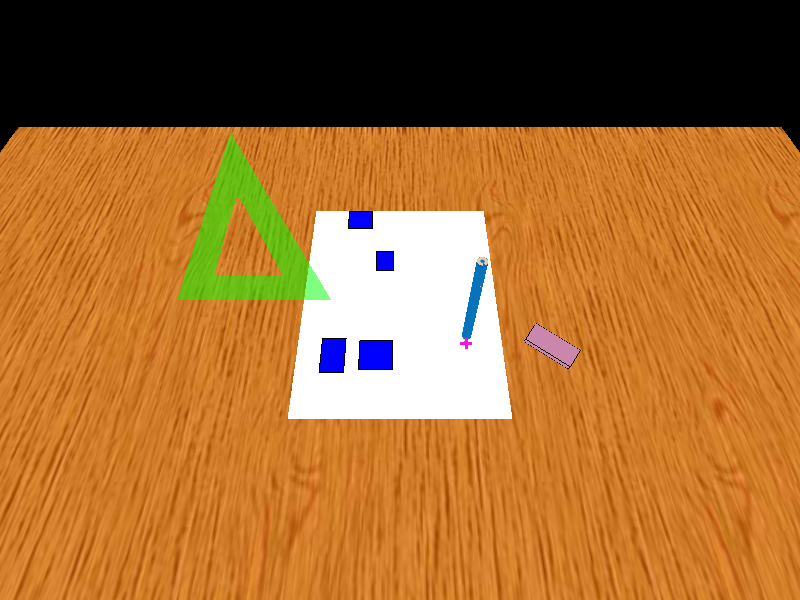

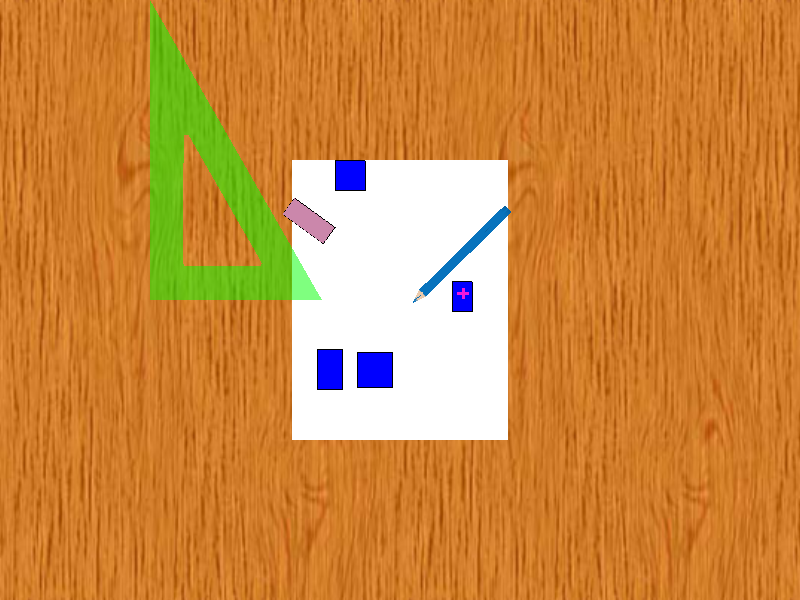

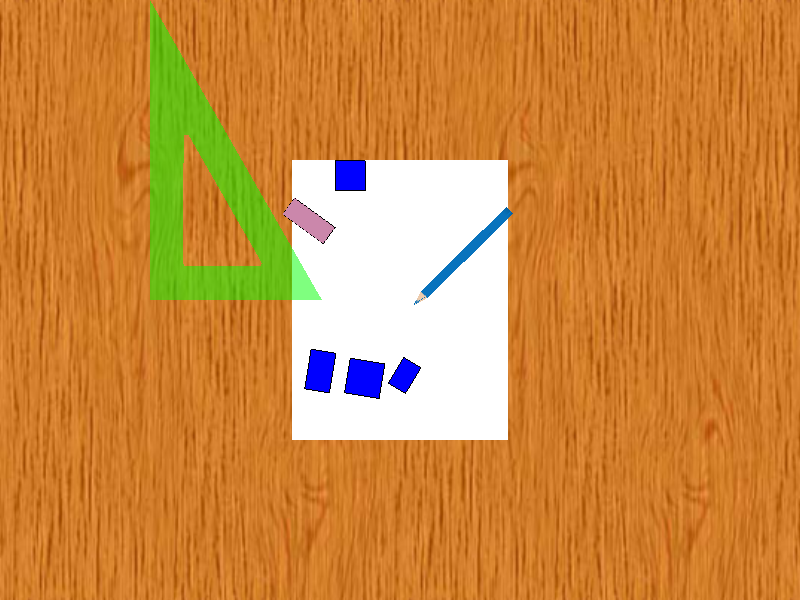

I provided two cameras for the user to visualize the scene, shown below. The first one is the typical orthographic view of most computer drawing programs. However, there is also a perspective view that mimics the view of a person actually sitting at his or her desk. These images are from an early version of the program, which had some very basic drawing code without lighting or shadows.

Orthographic camera

Perspective camera

If you have ever programmed a multi-touch interface, you know that it is very different from the usual mouse pointer programming. For example, with a mouse, you always know the position of the pointer, whether or not the buttons are pressed. However, with a multi-touch system, you only get the press events, so you do not know where the user's fingers are before they touch the surface.

Additionally, since the user is touching and dragging his or her fingers in more than one place, it becomes important to track each of these "trajectories" so that touch events are routed correctly. This was especially challenging with the DiamondTouch surface, since it provides a list of the x-coordinates and a list of the y-coordinates where the surface is pressed. It is up to you to piece this information together to compute the touch locations.
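To make the problem concrete, here is a minimal Python sketch of one way to pair per-axis readings into touch points. It is an illustration under assumptions, not the tracker used in physxDraw: the function names, the `max_jump` threshold, and the greedy nearest-candidate rule are all made up for this example. With two fingers down, two x-values and two y-values give four candidate points, so the sketch uses the last known position of each trajectory to pick the plausible ones.

```python
from itertools import product
from math import hypot


def candidate_points(xs, ys):
    """Every (x, y) pairing of the per-axis readings, ghost points included."""
    return list(product(xs, ys))


def update_trajectories(trajectories, xs, ys, max_jump=50.0):
    """Advance tracked touches by one input frame.

    trajectories: dict mapping trajectory id -> last known (x, y).
    xs, ys:       per-axis coordinates reported for this frame.
    max_jump:     assumed limit on how far a finger moves between frames.
    Returns a new dict; trajectories with no nearby candidate are dropped.
    """
    free_x, free_y = list(xs), list(ys)
    updated = {}

    # Each existing trajectory, in insertion order, claims its nearest
    # candidate built from the coordinates that are still unclaimed.
    for tid, (px, py) in trajectories.items():
        best = None
        for x, y in product(free_x, free_y):
            d = hypot(x - px, y - py)
            if d <= max_jump and (best is None or d < best[0]):
                best = (d, x, y)
        if best is not None:
            _, x, y = best
            updated[tid] = (x, y)
            free_x.remove(x)
            free_y.remove(y)

    # Leftover coordinates start new trajectories. Pairing them in sorted
    # order is a guess: with several new touches it is truly ambiguous.
    next_id = max(trajectories, default=-1) + 1
    for x, y in zip(sorted(free_x), sorted(free_y)):
        updated[next_id] = (x, y)
        next_id += 1
    return updated
```

This sketch ignores the case where two fingers share the same x or y value, in which case the device would presumably report that coordinate only once.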

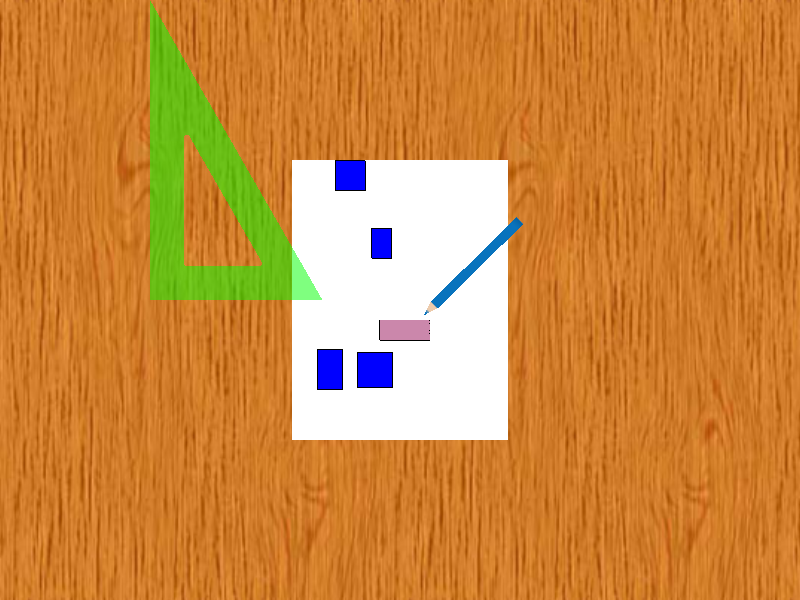

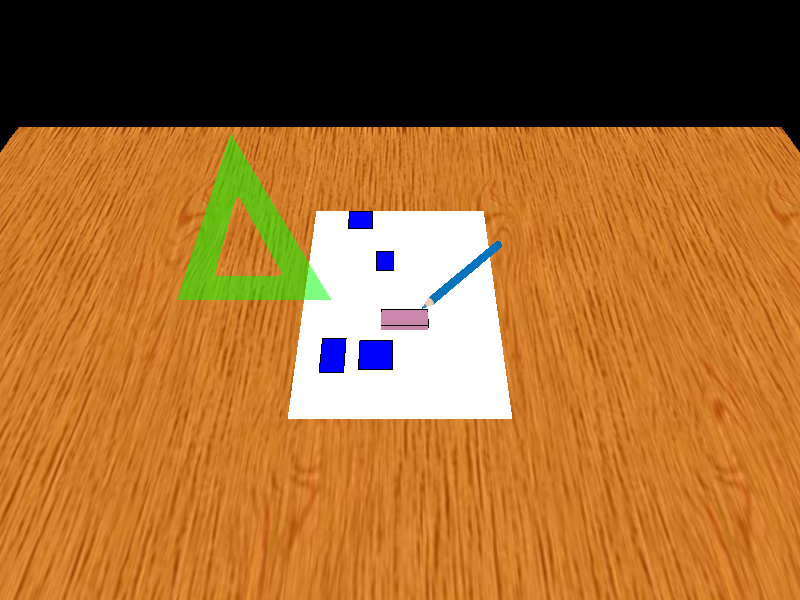

To move one of the tools, the user must simply press his or her finger on the appropriate tool and drag it. Note that the other tools will be pushed aside by this action. The images below show the user dragging the eraser, which pushes the pencil to the side.

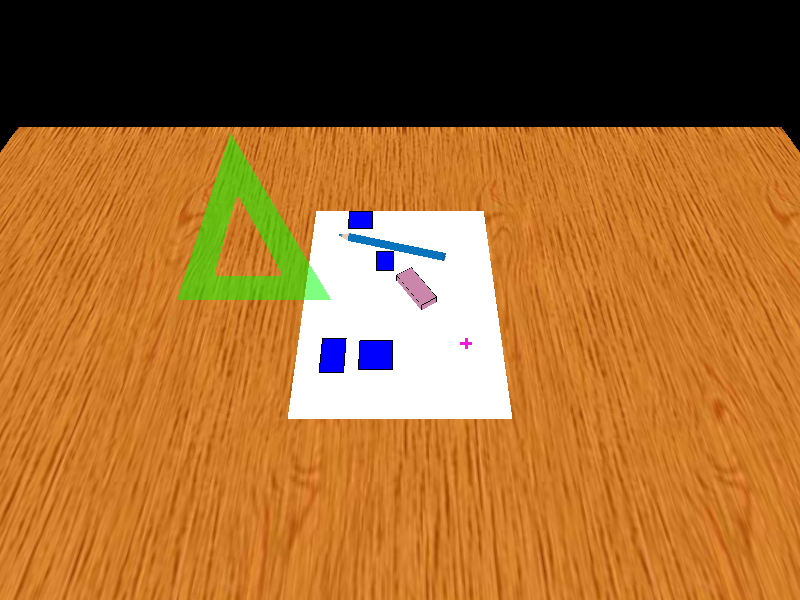

Moving a tool to the side is different from actually using the tool, so we need another gesture for this second action. Using the tool requires two steps. First, we must select the tool to use. This is done by touching the tool without moving your finger. After a short timeout, the tool will change color to show that it is active (the images show the old drawing code).


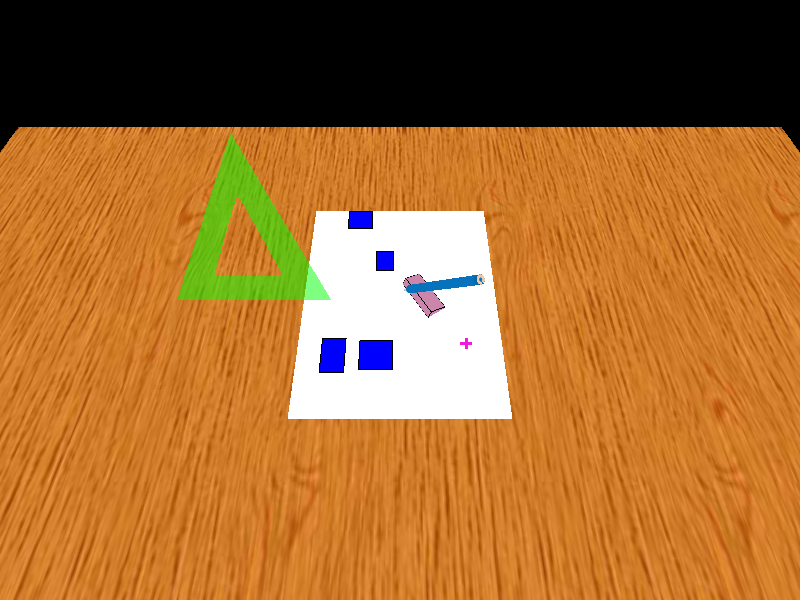

Next, the user presses on the part of the paper where the tool will be used. After a short timeout, the tool moves to this location and is ready to be used, as seen below (old drawing code). The "X" marks the location where the user put his or her finger. Note again how the eraser is pushed out of the way.
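The difference between dragging and press-and-hold can be sketched as a small per-touch state machine. This is a minimal Python illustration, not the physxDraw implementation: the class name and the `HOLD_SECONDS` and `MOVE_TOLERANCE` values are assumptions chosen for the example.

```python
from math import hypot

HOLD_SECONDS = 0.5    # assumed timeout before a still touch counts as a hold
MOVE_TOLERANCE = 5.0  # assumed jitter allowance, in surface units


class TouchGesture:
    """Classify one touch as a 'drag' or a 'hold' once enough is known."""

    def __init__(self, x, y, t):
        self.start = (x, y)
        self.t0 = t
        self.kind = "pending"

    def update(self, x, y, t):
        """Feed the latest position and timestamp; returns the current kind."""
        if self.kind != "pending":
            return self.kind  # once decided, the classification is final
        moved = hypot(x - self.start[0], y - self.start[1])
        if moved > MOVE_TOLERANCE:
            self.kind = "drag"   # finger moved: push the object around
        elif t - self.t0 >= HOLD_SECONDS:
            self.kind = "hold"   # finger stayed put: select or place
        return self.kind
```

A "hold" that starts on a tool would select it, and a later "hold" on the paper would place the selected tool there, while a "drag" simply moves whatever is under the finger.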


Here I got a little creative. Since we can break real-world physics when using the computer, I decided it would be interesting to explore what happens when the objects you draw automatically become physical objects as well. Therefore, I made all the drawable objects physical too. They only collide with each other, however, and cannot collide with the drawing tools. The images below show one such interaction (old drawing code).
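Physics engines commonly express this kind of rule with collision groups or filter masks. The following Python sketch shows the general idea only; the group names and the `should_collide` helper are hypothetical and are not the PhysX API.

```python
# Hypothetical collision groups, one bit each.
TOOLS = 1 << 0   # pencil, eraser, ruler
DRAWN = 1 << 1   # objects created by drawing

# For each group, the set of groups it is allowed to collide with.
COLLIDES_WITH = {
    TOOLS: TOOLS,   # tools push each other aside
    DRAWN: DRAWN,   # drawn objects only collide with each other
}


def should_collide(group_a, group_b):
    """True only if each group's mask accepts the other group."""
    return bool(COLLIDES_WITH[group_a] & group_b) and bool(
        COLLIDES_WITH[group_b] & group_a
    )
```

With these masks, a drawn shape passes straight through the pencil, while two drawn shapes still bump into each other.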


These objects also follow the interaction paradigms described above. They are moved by pressing and dragging, but in order to select one to, say, change its color, one must press and hold until the object determines it is selected.

The interactions I have described above are all that I managed to implement in this prototype. Some of the issues that I was not able to overcome were the following.

The DiamondTouch driver was somewhat buggy. Any time there was a second touch on the screen, the driver would report it on only one axis in the first frame where it appeared, and then on both axes in the next frame. This really screwed up the trajectory tracker, which assumed a new touch would show up on both axes immediately, so the trajectories came out completely wrong.

One thing I would have liked to spend more time on was the interaction between the pencil and the ruler when drawing (currently, no drawing code is implemented). In order to place the pencil where it will be used, and to follow the user's finger accurately, I turn off the physics and make the pencil push any object in its way. Therefore, even when holding the ruler in place with the other hand, I'm not sure how to create or detect the collision between the two tools to draw a straight line. Also, it becomes hard to place the pencil at the right spot to start the line, so the user may draw lots of lead-in paths before arriving at the ruler.

Finally, it became obvious that using your finger to draw precise lines was not necessarily a good paradigm for interaction, so we then thought of tracking a real pen or pencil using motion capture cameras. To me, this killed the spirit of physxDraw, so I did not pursue it further.

I had a blast putting physxDraw together, and I learned quite a bit of OpenGL and PhysX in the process. Unfortunately, the hardware, software, and touch interfaces did not really meld well together.

However, all is not lost. There are other ways to bring physics into a drawing program, even without a multi-touch interface. You can draw curves that have different stiffnesses, so that some curves will change their shape more readily than others. Also, you could try drawing objects under the influence of different force fields, such as a "gravity" field or a "wind" field within a region of the paper. These are ideas to be explored in the future.