Redesigning AR

A redesign of interactions for AR

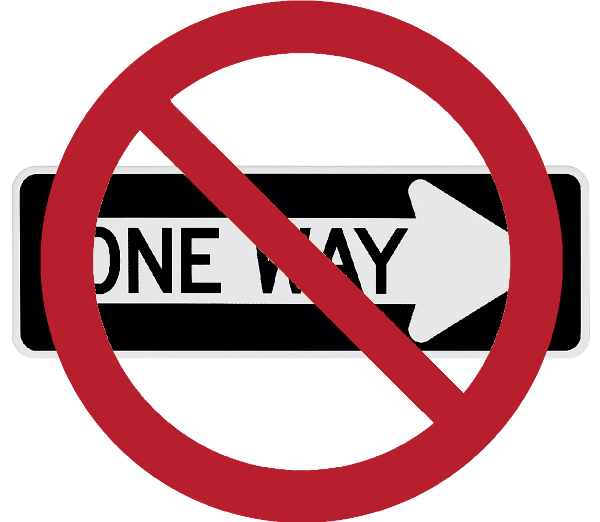

There’s no easy solution to this issue. Having been exposed to GUI toolkits our whole lives of interacting with computers, we have a tendency to slip into treating the world with these familiar constructs. Therefore, throughout this project and the ones leading up to it, there was a constant need to challenge solutions: to question whether they were in fact approached the right way, whether they were slipping back into old habits, or whether they were purposely obtuse in an effort not to be what was familiar. There’s a fine line between all three of these, but that’s what makes this project challenging.

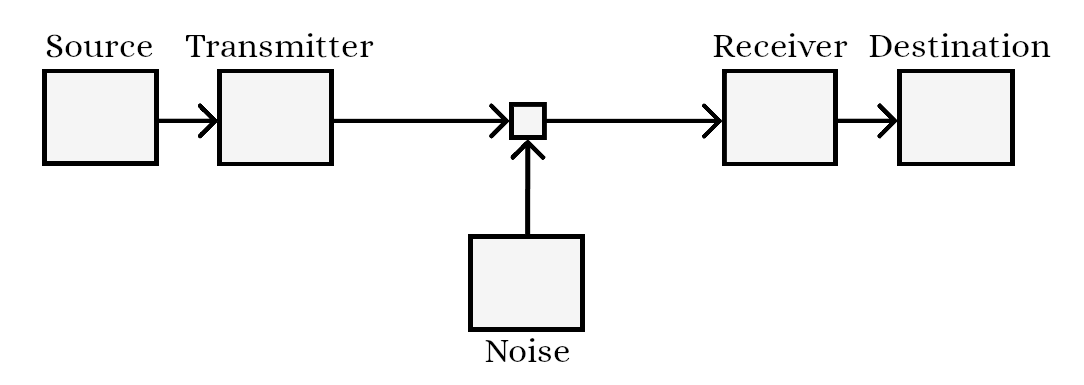

In the end, it was necessary to re-examine the whole interaction pipeline, from hardware all the way through to the user interaction.

During the initial exploration phase of this project, it quickly became apparent that one can’t look at the interactions of an AR system in isolation, as interactions are largely dependent on the hardware that supports them. Further, much of the hardware that will be used in the future does not yet exist, nor do many of the AI models that will support these systems. This raises an interesting question: “How does one design and develop interactions for a system and hardware that don’t yet exist?”

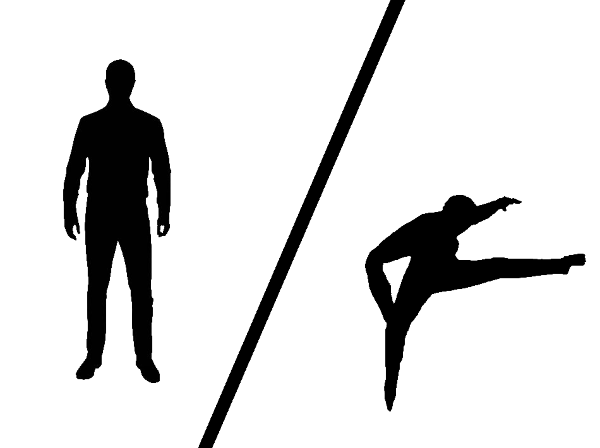

As the early work showed, there’s yet another issue. We’re used to working on desktops, which are controlled environments: we control the layout, the placement, the size, and so on. However, when designing for the real world, most of these controls no longer exist. So, we’re left with three fundamental design challenges:

Previous research by Mark Weiser and his team at Xerox PARC suggested that future interactions should be truly embedded and ubiquitous. What does this mean? Ultimately, it means that we want an interface that is effortless, seamless, and doesn’t distract us from our surroundings (i.e., one that fits in with what we’re doing).

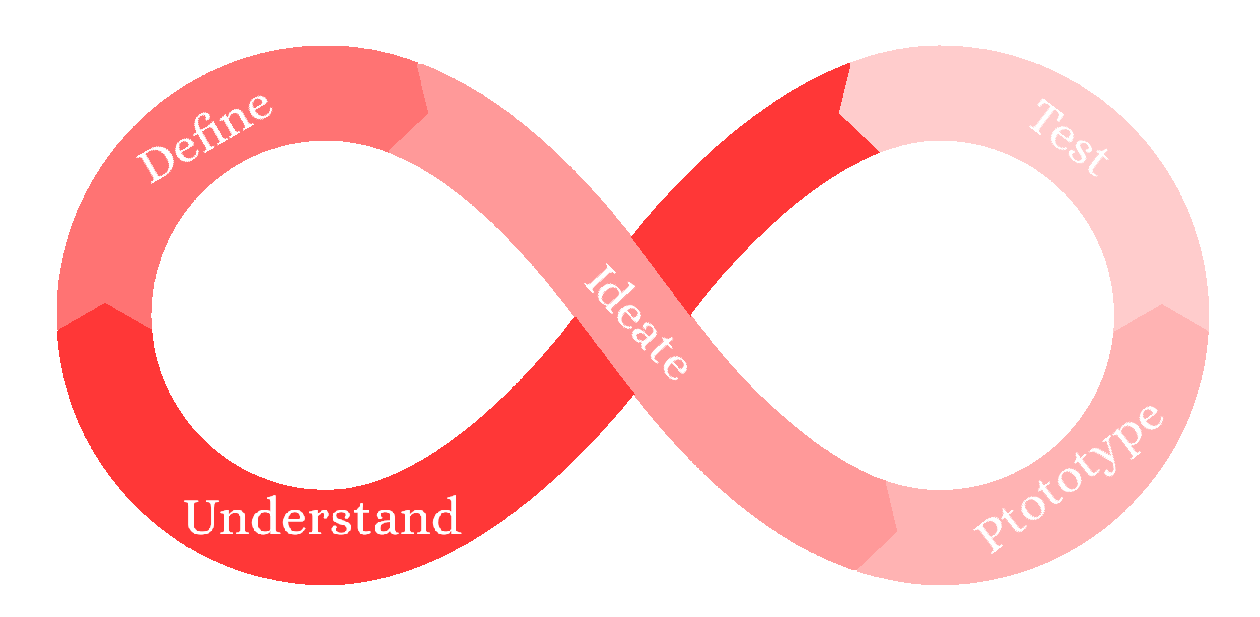

With this principle in mind, my approach was to work backwards and use a funnel method. To start, I threw out everything I knew about interactions. There were no constraints, no expectations, no set processes. In a previous project, we started looking at scenarios using the design thinking process: given a couple of different scenarios, how would we want AR to work? Again, we could do anything; there was no need to be realistic. We refined and redesigned these ideas multiple times to try to find new ways to interact. From there, I was able to start looking for trends, needs, problems, similarities, logistics, etc.

Some of the key realizations I had early on included:

This approach of working first from scenarios allows one to think about the experience as a whole instead of the individual actions (which are the usual focus when designing interfaces). Another factor when thinking about the whole pipeline in this manner is that the developer becomes an end user as well. It’s possible to envision the greatest idea, but if it’s impossible to develop, it becomes an exercise in futility.

Re-imagining traditional interfaces doesn’t mean that one should ignore existing techniques; after all, each interaction element on a computer accomplishes a conceptual task. Take the button as an example: a button is used to “acknowledge” or “confirm” a suggestion or decision, and doing so requires one to use a finger or hand to “tap” or “push” it.

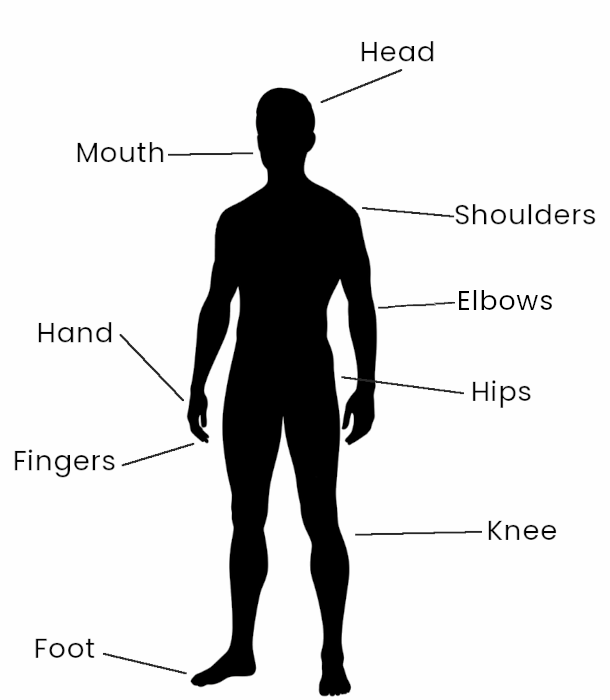

In the real world, your hands may not be free. This doesn’t mean you can’t acknowledge the task, only that you need other methods. Thinking about the human body, one could easily acknowledge using a grunt, a specific word, a nod or raising of the head, a flick or tap of a finger or hand, a tap of the foot, a swing of the hip... In reality, it depends on which parts of your body are free; how well you can move; whether you have any physical impairments; your environment (e.g., are you in the middle of a meeting, on the subway, in your home, in a park?); and what surfaces are available. All of these need to be factored in just to choose the best way to acknowledge something. One can see how quickly designing such a system gets complicated.
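To make the acknowledgement problem above a little more concrete, here is a minimal sketch of how a system might pick an acknowledgement method from the user’s current context. Everything in it is hypothetical and illustrative: the `Context` fields, the method names, the assumed order of preference, and the `QUIET_SETTINGS` set are not from any AR SDK, and surfaces are not modelled at all.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Context:
    """Hypothetical snapshot of the user's situation (all field names are illustrative)."""
    free_body_parts: set = field(default_factory=set)  # e.g. {"hand", "head", "foot", "voice"}
    impairments: set = field(default_factory=set)      # body parts the user cannot rely on
    setting: str = "home"                              # e.g. "meeting", "subway", "home", "park"


# Hypothetical acknowledgement methods, each paired with the body part it needs,
# in an assumed order of preference (least conspicuous first).
ACK_METHODS = [
    ("finger_tap", "hand"),
    ("nod", "head"),
    ("foot_tap", "foot"),
    ("spoken_word", "voice"),
]

# Settings where speaking aloud is assumed to be inappropriate.
QUIET_SETTINGS = {"meeting", "subway"}


def choose_acknowledgement(ctx: Context) -> Optional[str]:
    """Return the first acknowledgement method the current context allows, or None."""
    for method, body_part in ACK_METHODS:
        if body_part not in ctx.free_body_parts or body_part in ctx.impairments:
            continue  # that body part is busy or unavailable
        if body_part == "voice" and ctx.setting in QUIET_SETTINGS:
            continue  # speaking would be disruptive here
        return method
    return None  # no suitable method; the system would need some other fallback


# Hands busy during a meeting: a nod is chosen over speaking.
print(choose_acknowledgement(Context(free_body_parts={"head", "voice"}, setting="meeting")))  # nod
```

Even in this toy version, the order of `ACK_METHODS` is itself a design decision, and a real system would likely need to weigh these factors rather than apply a fixed priority list.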

This leads to one of the many realizations from this project: developers are unlikely to write a whole program from beginning to end, but will instead focus on individual tasks, and applications as we know them today will be the accumulation of many of these different tasks.
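One way to picture an application as an accumulation of tasks is sketched below, using two of the umbrella behaviours from the scenario that follows. This is an illustration under simplifying assumptions, not a proposed architecture: the task names, the shared `state` dictionary, and the 2 m distance threshold are all hypothetical, and a real system would also need scheduling and conflict resolution between tasks.

```python
from typing import Callable, Dict, List

# A "task" in this sketch is any function that looks at the shared state and
# returns the actions it wants to take (an empty list if it has nothing to do).
Task = Callable[[Dict], List[str]]


def rain_reminder(state: Dict) -> List[str]:
    """Remind the user about rain when they first put on the glasses."""
    if state.get("rain_expected") and state.get("just_put_on_glasses"):
        return ["remind: it's supposed to rain today"]
    return []


def umbrella_nearby(state: Dict) -> List[str]:
    """Point out the umbrella when the user is close to it (2 m is an arbitrary threshold)."""
    if (state.get("rain_expected")
            and not state.get("umbrella_by_door")
            and state.get("distance_to_umbrella_m", float("inf")) < 2.0):
        return ["suggest: your umbrella's over here, grab it now?"]
    return []


def run_application(tasks: List[Task], state: Dict) -> List[str]:
    """An 'application' here is just the accumulated output of independent tasks."""
    actions: List[str] = []
    for task in tasks:
        actions.extend(task(state))
    return actions


morning_app = [rain_reminder, umbrella_nearby]
state = {"rain_expected": True, "just_put_on_glasses": True,
         "umbrella_by_door": False, "distance_to_umbrella_m": 1.5}
print(run_application(morning_app, state))
```

Because each task only reads the state and reports what it wants to do, a developer could write or replace one task without touching the others.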

This project goes well beyond the scope of a simple portfolio, but to give a sense of the work, I’m outlining an example scenario and a partial solution to it.

The scenario is that you’ve woken up in the morning and put on your AR glasses. We will examine a process that tries to remind you to bring your umbrella as you leave for work, because it might rain today.

The simplest approach to solving this problem would be to let you get ready in the morning, and as you’re about to leave, project a notice on the door saying “Don’t forget your umbrella!”.

This approach would potentially work, but what would it look like if we used a cell phone instead?

Using your cell phone, you’d get ready in the morning, and as you were about to leave, your phone would buzz, and by looking at your phone, or possibly your watch, you would get a notice saying “Don’t forget your umbrella!” This is almost the exact same thing! So, is AR worth it, or is the cell phone good enough?

If using AR isn’t much better than using a cell phone, why use AR?

During the initial exploration, this was a problem we came across time and again. I finally realized that the key to interacting with AR is the workflow. Using AR, we can improve the user’s workflow, speed up their tasks, and make their efforts more efficient.

What does this example look like, applied with this mindset in AR?

With AR, one of the main differences is that it can help you while you’re doing other things. So, like before, you put on your AR glasses, and perhaps (based on your preferences) it reminds you right away “It’s supposed to rain today...you probably want to bring your umbrella”. As you go about your morning routine, if your umbrella isn’t by the front door, it might notify you when you’re closest to it, saying “hey, your umbrella’s over here, do you want to grab it now?”

When you finally get to the front door, if you don’t have your umbrella, it could display a notification on the door saying “Don’t forget your umbrella”, but it could also provide a haptic response in the direction of your umbrella, and possibly a visual cue suggesting you look in that direction. When the umbrella is within your visual range, it may highlight the umbrella to help you quickly identify it amid everything around it.

If you look at what I just wrote, you’ll notice qualifiers such as “perhaps”, “it might/may”, and “it could”. This identifies another big difference with an AR approach. On desktops things are absolute (or digital). Things happen or they don’t. In the real world, things are less certain, and as a result decisions need to be based more on a continuum (or more analog). Regardless, this highlights another way in which we need to change how we think about the problem.
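As one possible illustration of deciding on a continuum rather than with a yes/no rule, the sketch below turns two uncertain estimates into a graded level of intervention. The function name, the inputs, the thresholds, and the mapping from levels to cues are all hypothetical; multiplying the two probabilities treats them as independent, which is a simplifying assumption.

```python
def reminder_level(p_rain: float, p_has_umbrella: float) -> str:
    """Map two uncertain estimates to a graded response instead of a yes/no.

    p_rain: estimated probability of rain today.
    p_has_umbrella: estimated probability the user already has the umbrella.
    The thresholds below are hypothetical and would need tuning per user.
    """
    for p in (p_rain, p_has_umbrella):
        if not 0.0 <= p <= 1.0:
            raise ValueError("probabilities must be in [0, 1]")
    # Treats the two estimates as independent (a simplifying assumption).
    need = p_rain * (1.0 - p_has_umbrella)
    if need < 0.2:
        return "none"              # not worth interrupting
    if need < 0.5:
        return "subtle"            # e.g. only highlight the umbrella if it is in view
    if need < 0.8:
        return "notify"            # e.g. a notification by the door
    return "notify_and_guide"      # e.g. notification plus directional cues


print(reminder_level(0.9, 0.5))  # subtle
```

The point is not the specific numbers but the shape: the system’s response scales with how confident it is, which is where qualifiers like “perhaps” and “it might” come from.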

To break this problem down into user flows, we need to separate the problem into individual tasks that need to be considered. To start, let’s look at the process of making sure the umbrella is by the door.

First is the obvious question: “Do you even have an umbrella?” If not, we can shut down the whole process.

Assuming you do have one, there’s the question of whether the umbrella is already by the front door. If it is, this task is complete.

If not, we might consider whether you pass the umbrella during your morning routine. If so, the system may suggest that you grab it on the way by; otherwise, it may offer to lead you to the umbrella on the way to the door.

It’s worth noting that getting the user to grab the umbrella and leading the user to the umbrella are separate tasks, so they would require separate flows, considerations, and interactions.
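The decision flow for getting the umbrella to the door can be sketched as a small function that only decides the next step and leaves the follow-up interactions to their own flows. The enum, the dataclass, and their field names are hypothetical; using plain booleans is also a simplification, since, as noted earlier, real-world inputs are less certain.

```python
from dataclasses import dataclass
from enum import Enum


class NextStep(Enum):
    STOP = "no umbrella: shut down the whole process"
    DONE = "umbrella is already by the front door"
    SUGGEST_GRAB = "suggest grabbing it on the way by"
    OFFER_TO_LEAD = "offer to lead the user to it on the way to the door"


@dataclass
class UmbrellaFacts:
    """Hypothetical inputs; in practice each would be an uncertain estimate."""
    owns_umbrella: bool
    umbrella_by_door: bool
    passes_umbrella_in_routine: bool


def umbrella_to_door(facts: UmbrellaFacts) -> NextStep:
    """Decide the next step for getting the umbrella to the front door."""
    if not facts.owns_umbrella:
        return NextStep.STOP
    if facts.umbrella_by_door:
        return NextStep.DONE
    if facts.passes_umbrella_in_routine:
        return NextStep.SUGGEST_GRAB    # hands off to its own "grab it" flow
    return NextStep.OFFER_TO_LEAD       # hands off to a separate "lead the user" flow


print(umbrella_to_door(UmbrellaFacts(True, False, False)).name)
```

Keeping the decision separate from the interactions it triggers mirrors the point that grabbing and leading are different tasks with their own flows.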

Another task, which could be part of the previous one or run alongside it, is to monitor whether you’re by the front door and respond accordingly. In this case, we need to detect that you’re by the front door, and then check whether the umbrella is there as well. If the umbrella isn’t present, the system can trigger the task that leads the user to the umbrella; if it is present, the system can go through the process of reminding you to take it.
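The front-door monitoring task can be sketched as a small object that receives location updates and hands off to one of the two follow-up tasks. The class, its method names, and the callbacks are hypothetical. One design choice here is also an assumption: the monitor reacts only when the user *arrives* at the door, rather than on every update while they stand there, so the user isn’t repeatedly interrupted.

```python
from typing import Callable


class FrontDoorMonitor:
    """Hypothetical monitor for the 'are you by the front door?' task.

    It hands off to one of two separate tasks, passed in as callbacks:
    leading the user to the umbrella, or reminding them to take it.
    """

    def __init__(self, lead_to_umbrella: Callable[[], None],
                 remind_to_take: Callable[[], None]) -> None:
        self.lead_to_umbrella = lead_to_umbrella
        self.remind_to_take = remind_to_take
        self._was_at_door = False

    def update(self, at_front_door: bool, umbrella_at_door: bool) -> None:
        """Call on every location update from a (hypothetical) tracking system."""
        arrived = at_front_door and not self._was_at_door  # react once per arrival
        self._was_at_door = at_front_door
        if not arrived:
            return
        if umbrella_at_door:
            self.remind_to_take()
        else:
            self.lead_to_umbrella()


log = []
monitor = FrontDoorMonitor(lambda: log.append("lead"), lambda: log.append("remind"))
for at_door, umbrella_there in [(False, False), (True, False), (True, False), (False, True), (True, True)]:
    monitor.update(at_door, umbrella_there)
print(log)  # ['lead', 'remind']
```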

As a possible implementation for reminding the user to bring their umbrella, we conceivably could break the problem into the following tasks:

Display a notification by the door

Highlight the umbrella

Audio cue in the direction of the umbrella

Haptic cue in the direction of the umbrella

Though this seems simple enough, there are no standards for AR, and the situation itself needs to be considered. Therefore, these tasks need to be broken down further still. We need to ask further questions about each task.
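One of those further questions, for the audio and haptic cues, is what “in the direction of the umbrella” means relative to where the user is facing. The sketch below assumes the system already knows the user’s and the umbrella’s positions on a flat 2D floor plan (x east, y north) and a compass-style heading; the function names and the 30° widths for “ahead” and “behind” are hypothetical choices, not standards.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def relative_bearing_deg(user: Point, heading_deg: float, target: Point) -> float:
    """Angle of the target relative to where the user is facing, in [-180, 180).

    Assumes x points east, y points north, and heading is measured clockwise
    from north. Positive results mean "to the user's right".
    """
    dx = target[0] - user[0]
    dy = target[1] - user[1]
    bearing = math.degrees(math.atan2(dx, dy))  # clockwise from north
    return (bearing - heading_deg + 180.0) % 360.0 - 180.0


def cue_direction(rel_deg: float, ahead_half_width: float = 30.0,
                  behind_half_width: float = 30.0) -> str:
    """Collapse a relative angle into a coarse cue; the widths are hypothetical defaults."""
    if abs(rel_deg) <= ahead_half_width:
        return "ahead"
    if abs(rel_deg) >= 180.0 - behind_half_width:
        return "behind"
    return "right" if rel_deg > 0 else "left"


# User at the door facing north; umbrella 3 m to the east.
print(cue_direction(relative_bearing_deg((0.0, 0.0), 0.0, (3.0, 0.0))))  # right
```

A haptic cue might only need the coarse left/right/behind answer, while spatial audio could use the continuous angle directly.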

As you can see, there are many different things to think about, even for a fairly simple task. It’s possible that in the future some of these factors will be standardized and, as designers, we won’t need to think about them; in the short term, however, they all need to be considered.

Though these ideas were never developed into a product, these initial explorations yielded many insights and observations. Some of these have already been listed, but I include them here again for completeness.

Some of the key realizations/discoveries are:

These are just some of the realizations, and each realization likely lends itself to further exploration. But that will have to wait for later projects.

This project was a phenomenal opportunity to explore an unknown and largely unexplored design space. It was also probably one of the hardest projects I’ve undertaken, in that there were no clear end goals, no clear constraints, and no clear direction. It was an amazing example of absolute ambiguity.

That being said, this was also a great opportunity to work through ambiguity: to find constraints, direction, and self-defined goals; to figure out ways to notice progress; and to see clarity come to areas that were otherwise unknown.

This was also an interesting project in that most projects (especially in academia and research) focus on small problem spaces with very narrow breadth, making small increments to what is already known. This project required the opposite approach: a perspective of the whole system. From there, it was possible to work down, re-imagining (roughly at first) and then further refining, sculpting the space much the way an artist might create a marble statue.

Another point that became very clear throughout this project was that it was difficult to show and communicate progress on something this large and ambiguous, as most people external to the project didn’t have the knowledge or context to understand all the findings and definitions being discovered. It is easier to communicate smaller, more clearly defined efforts, and to refine specifics rather than generalizations.

Regardless, this project was brought to a state where smaller-scoped projects will now be easier to define and pursue, adding to this rich and exciting future interaction space.