Approach to Software Creation

I've designed and developed several large software frameworks for web-based artificial agents, computer animation, digital human modeling and robotics research. I tend to use object-oriented design with an eye to future extensibility and modularity, often employing dynamic plug-in component models. I've also written class libraries that allow functionality to be easily incorporated into other people's code. The following are some of the software projects I'm working on or have worked on, used both for my own research and in commercial products. Most of my software is written in C++, and I'm a big fan of the Qt Toolkit.

MOVE-IT:

Monitoring, Operating, Visualizing, Editing Integration Tool (2008-current)

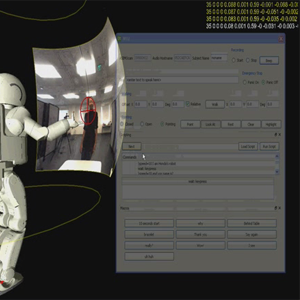

My software, MOVE-IT (Monitoring, Operating, Visualizing, Editing Integration Tool), is part of an ongoing research project to develop intuitive, easy-to-use software for visualizing and operating the many complex systems that run as a distributed network of modules controlling our robot's motor, perceptual and decision-making systems.

Features

I use Qt extensively, particularly QtQuick, QtScript, QThread and the Graphics View framework with heavy OpenGL integration. The software is highly customizable and can serve as a platform for assembling and configuring a variety of complex systems simply by editing an XML configuration file. Different computational modules can be seamlessly integrated and mixed like a mash-up to create very different customized applications on the same computational engine. Visually, Qt's tight integration with OpenGL lets applications have very simple interfaces, free of GUI clutter (e.g., window frames, buttons), while still accommodating GUI elements when needed. I use QtScript so that Qt signal-slot connections can be specified in XML and interpreted at run time, creating the connections as the XML is parsed to configure an application. QtQuick allows user-interface controls to be specified in QML as needed. The useful libqxt library lets multiple MOVE-IT engines communicate with each other across a distributed system of several computers when needed.
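
As a rough illustration of wiring connections from configuration text rather than code, here is a Qt-free C++ sketch. It does not reproduce MOVE-IT's XML schema or its QtScript layer: the `Module`, `ConnectionSpec` and `wire` names are hypothetical, and signals and slots are modeled as named `std::function` callbacks carrying a `double`. The idea resembles Qt's string-based `QObject::connect` with `SIGNAL()`/`SLOT()`, where names are resolved at run time; in this sketch a misspelled name throws at load time.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <stdexcept>
#include <string>
#include <vector>

// A hypothetical module exposing named slots and named outgoing signals.
// Every signal and slot here carries a single double value.
struct Module {
    std::map<std::string, std::function<void(double)>> slotTable;
    std::map<std::string, std::vector<std::function<void(double)>>> outgoing;

    // Fire a signal: call every slot connected to it, in connection order.
    void emitSignal(const std::string& name, double value) {
        for (auto& slot : outgoing[name]) slot(value);
    }
};

// One connection as it might be read from an XML element, e.g.
// <connect sender="kinect" signal="handMoved" receiver="graph" slot="addSample"/>
struct ConnectionSpec {
    std::string sender, signal, receiver, slot;
};

// Resolve the string names against the module registry and create the connections.
// Unknown modules or slots throw, so configuration errors surface at load time.
void wire(std::map<std::string, Module>& modules,
          const std::vector<ConnectionSpec>& specs) {
    for (const auto& c : specs) {
        auto src = modules.find(c.sender);
        auto dst = modules.find(c.receiver);
        if (src == modules.end() || dst == modules.end())
            throw std::runtime_error("unknown module: " + c.sender + " or " + c.receiver);
        auto target = dst->second.slotTable.find(c.slot);
        if (target == dst->second.slotTable.end())
            throw std::runtime_error("unknown slot: " + c.slot);
        src->second.outgoing[c.signal].push_back(target->second);
    }
}
```

In a fuller version, each `ConnectionSpec` would be filled from the attributes of an XML element while the configuration file is parsed.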

Various modules I wrote in the MOVE-IT framework feature live graphing of data, live and pre-recorded video playback, natural user interfaces using Kinect, and multi-modal processing such as combining audio localization with face detection.
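
One simple way to combine audio localization with face detection is to attribute a sound to the detected face whose bearing lies closest to the estimated sound direction. The sketch below assumes both estimates are already expressed as horizontal bearings in degrees in a common frame; the `matchSpeaker` function and its tolerance parameter are hypothetical and not taken from MOVE-IT.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <optional>
#include <vector>

// Smallest absolute difference between two bearings in degrees, accounting for wrap-around at 360.
double angularDistance(double a, double b) {
    double d = std::fmod(std::fabs(a - b), 360.0);
    return d > 180.0 ? 360.0 - d : d;
}

// Return the index of the detected face whose bearing best matches the sound source,
// or an empty optional if no face lies within toleranceDeg of the audio estimate.
std::optional<std::size_t> matchSpeaker(const std::vector<double>& faceBearingsDeg,
                                        double audioAzimuthDeg,
                                        double toleranceDeg) {
    assert(toleranceDeg >= 0.0);
    std::optional<std::size_t> best;
    double bestDist = toleranceDeg;
    for (std::size_t i = 0; i < faceBearingsDeg.size(); ++i) {
        double d = angularDistance(faceBearingsDeg[i], audioAzimuthDeg);
        if (d <= bestDist) {
            best = i;
            bestDist = d;
        }
    }
    return best;
}
```

Returning "no match" rather than the nearest face keeps an off-camera speaker from being attributed to whoever happens to be visible.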

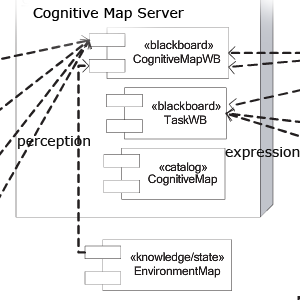

The Cognitive Map Architecture:

(2008-2009 at the Honda Research Institute USA)

This was the product of a critical collaboration with Thor List and Kristinn R. Thorisson. We took their excellent software, which features a publish-subscribe, time-sensitive, message-based blackboard architecture, and added our own abstraction layers and a generic, extensible, serializable object model to provide the integration critical for a real-time, interactive robot. This architecture is the main middleware we use to connect a variety of perceptual, cognitive and behavioral components into an intelligent distributed system that can be used for robots, smart rooms or any interactive system, physical or virtual.
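
To make the publish-subscribe blackboard idea concrete, here is a minimal single-process C++ sketch. It is not the Cognitive Map's API or that of the software it builds on: the `Blackboard` and `Message` types are hypothetical, payloads are plain strings standing in for serialized objects, and there is no networking or threading. It shows two properties described above: components subscribe to message types rather than to each other, and every message is timestamped so consumers can ask for only recent data.

```cpp
#include <cassert>
#include <chrono>
#include <functional>
#include <map>
#include <string>
#include <utility>
#include <vector>

// A message posted to the blackboard: a type tag, a timestamp and a serialized payload.
struct Message {
    std::string type;
    std::chrono::steady_clock::time_point postedAt;
    std::string payload;
};

class Blackboard {
public:
    using Handler = std::function<void(const Message&)>;

    // Register interest in a message type; publishers never see their subscribers.
    void subscribe(const std::string& type, Handler h) {
        subscribers_[type].push_back(std::move(h));
    }

    // Timestamp the message, keep it in the history and deliver it to subscribers of its type.
    void post(const std::string& type, std::string payload) {
        Message m{type, std::chrono::steady_clock::now(), std::move(payload)};
        history_.push_back(m);
        auto it = subscribers_.find(type);
        if (it == subscribers_.end()) return;
        for (auto& h : it->second) h(m);
    }

    // Messages of a given type posted within the last `window`, for time-sensitive queries.
    std::vector<Message> recent(const std::string& type,
                                std::chrono::milliseconds window) const {
        auto cutoff = std::chrono::steady_clock::now() - window;
        std::vector<Message> out;
        for (const auto& m : history_)
            if (m.type == type && m.postedAt >= cutoff) out.push_back(m);
        return out;
    }

private:
    std::map<std::string, std::vector<Handler>> subscribers_;
    std::vector<Message> history_;
};
```

Decoupling by message type is what lets perceptual, cognitive and behavioral components be added or swapped without the others being rewired.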

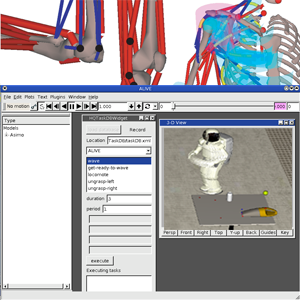

ALIVE:

Anthropometric Locomotion in Virtual Environments

(2001-2007 at the Honda Research Institute USA)

ALIVE was my main research testbed for projects at the Honda Research Institute USA (see research page). I'm constantly adding to its functionality and use it to put together projects. Originally it was used for my work in Digital Human Modeling, but it has since been extended to humanoid robot task modeling.

Features

This software consists of a comprehensive class library and Qt-based application framework for handling many aspects of biomechanical simulation, including articulated joint models, mass property computation, forward dynamics simulation, and visualization with computer graphics. An XML-based file format describes the physical scene and models in the environment. This software has been used to model and analyze dinosaurs, elephants, humans and humanoid robots. A flexible plug-in architecture provides extensibility for file format input/output, new geometry models and interactive applications. For example, plug-ins have been written for inter-agent socket communication, motion capture integration, pushing motion planners for robots, and subject-specific skeleton fitting via optimization.
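
A plug-in architecture for file input/output can be reduced to an abstract interface plus a registry that picks an implementation at run time. In this sketch the `FormatPlugin`, `PluginRegistry` and `ObjPlugin` names are hypothetical, not ALIVE's classes, and plug-ins are registered statically; loading them from shared libraries (for example with `dlopen` or `LoadLibrary`) is left out.

```cpp
#include <algorithm>
#include <cassert>
#include <cctype>
#include <map>
#include <memory>
#include <string>
#include <utility>

// Abstract interface that every (hypothetical) import/export plug-in implements.
class FormatPlugin {
public:
    virtual ~FormatPlugin() = default;
    virtual std::string extension() const = 0;  // e.g. "obj", "bvh", "c3d"
    virtual std::string describe() const = 0;
};

// Registry mapping lower-cased file extensions to the plug-in that handles them.
class PluginRegistry {
public:
    void add(std::unique_ptr<FormatPlugin> p) {
        std::string key = lower(p->extension());
        plugins_[key] = std::move(p);
    }

    // Choose a plug-in from the file name's extension; returns nullptr if none is registered.
    FormatPlugin* forFile(const std::string& filename) const {
        auto dot = filename.find_last_of('.');
        if (dot == std::string::npos) return nullptr;
        auto it = plugins_.find(lower(filename.substr(dot + 1)));
        return it == plugins_.end() ? nullptr : it->second.get();
    }

private:
    static std::string lower(std::string s) {
        std::transform(s.begin(), s.end(), s.begin(),
                       [](unsigned char c) { return static_cast<char>(std::tolower(c)); });
        return s;
    }
    std::map<std::string, std::unique_ptr<FormatPlugin>> plugins_;
};

// Example plug-in: a stub Wavefront OBJ handler with no actual parsing.
class ObjPlugin : public FormatPlugin {
public:
    std::string extension() const override { return "obj"; }
    std::string describe() const override { return "Wavefront OBJ"; }
};
```

Because the application only talks to `FormatPlugin`, supporting another format means adding one class without touching the core.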

A wide variety of data import/export is supported, including the C3D, Wavefront OBJ, FBX, BVH and VRML file formats, among others. The software is multi-platform and actively runs on Windows XP, Linux and MacOS.

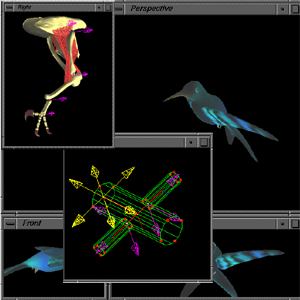

DANCE:

Dynamic Animation and Control Environment

(1994-2000 at the University of Toronto, now maintained at UCLA)

During my PhD at the University of Toronto, I co-developed with Petros Faloutsos a physics-based animation system called DANCE (Dynamic Animation and Control Environment). DANCE could be extended by plug-ins and featured abstract interfaces for controllers, numerical integrators and physical models. It could be used as a physics-simulation playground for testing a variety of ideas, from virtual stuntmen to biomechanical models of muscle. This was a formative experience for me, as it was on this project that I really started building plug-in-based frameworks with abstract component interfaces.

Features

DANCE allows different physical models to be combined, such as multibody rigid dynamics, deformable Lagrangian dynamics, 3-D particle systems and spring-mass models. Each of these models can have its own collision models and controllers designed and applied via plug-ins. Visualization is based on OpenGL, but other output formats are supported for different scene description formats and rendering libraries.
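
DANCE's separation of physical models from numerical integrators can be illustrated with a small C++ sketch: any model that can report its state and that state's time derivative can be advanced by any integrator. These class names are hypothetical, not DANCE's actual API, and forward Euler is used only because it is the simplest choice. It slowly gains energy on oscillating systems like this spring, which is exactly the kind of limitation that makes swappable integrator plug-ins useful.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using State = std::vector<double>;

// Abstract physical model: exposes its state and the time derivative of a state.
class PhysicalModel {
public:
    virtual ~PhysicalModel() = default;
    virtual State state() const = 0;
    virtual void setState(const State& s) = 0;
    virtual State derivative(const State& s) const = 0;
};

// Abstract numerical integrator: advances any PhysicalModel by one time step.
class Integrator {
public:
    virtual ~Integrator() = default;
    virtual void step(PhysicalModel& model, double dt) const = 0;
};

// Forward (explicit) Euler: s(t + dt) = s(t) + dt * ds/dt.
class ExplicitEuler : public Integrator {
public:
    void step(PhysicalModel& model, double dt) const override {
        State s = model.state();
        State ds = model.derivative(s);
        assert(s.size() == ds.size());
        for (std::size_t i = 0; i < s.size(); ++i) s[i] += dt * ds[i];
        model.setState(s);
    }
};

// 1-D mass on a spring anchored at the origin: state = {position, velocity}.
class SpringMass : public PhysicalModel {
public:
    SpringMass(double mass, double stiffness, double x0)
        : m_(mass), k_(stiffness), s_{x0, 0.0} {}
    State state() const override { return s_; }
    void setState(const State& s) override { s_ = s; }
    State derivative(const State& s) const override {
        return {s[1], -k_ / m_ * s[0]};  // dx/dt = v, dv/dt = -(k/m) x
    }

private:
    double m_, k_;
    State s_;
};
```

With mass 1, stiffness 4 and a 0.5 s step starting from x = 1, one Euler step gives x = 1, v = -2, and a second gives x = 0, v = -4.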

I am no longer actively working on DANCE. However, DANCE has subsequently been extended and improved by Ari Shapiro and has its own home page at UCLA.

PlanetExplorer: (for ESPi)

Software demo for 3-D hardware digitizing pen

This project was written with the Windows Presentation Foundation (WPF) SDK using a combination of C#, XAML, Zam3D and Microsoft Expression. I was tasked with developing a demo application for exploring our solar system. The interface used a wireless 3-D digitizing pen that tracked 6 degrees of freedom, so the pen could be used to change the camera view, zoom in, roll, or act as a flashlight to illuminate a planet's surface. I wanted to see what was really possible with WPF. Overall, WPF can be powerful, but I felt its design lacked coherence: one had to resort to many different tools and techniques to get things done instead of a single common language or environment.

NetPeople:

3-D agents for the web

(1998-2000, version 1.0, at Inago Inc., Toronto)

I really enjoyed working on this product. We were in the midst of the dot-com boom in the late '90s, and I was the lead software architect for the first version of NetPeople, our attempt to build 3-D agents for the web. The concept was for web pages to have interactive 3-D agent representatives that could take queries directly from visitors, answer in speech and automatically browse to the requested pages, minimizing the effort needed to find and navigate to desired content. This involved the complex integration of 3-D OpenGL graphics, web-based JavaScript for agent control of web browsing, and text-to-speech and speech recognition technologies. There was a strong sense of urgency, and we had to adjust quickly to a demanding pace of development while tackling technical challenges. I had to figure out how to render a real-time OpenGL character as a full 3-D character that would walk around the desktop, outside the browser and without any rectangular window borders (i.e., the silhouette of the character was the window border). This was my first substantial software design in which we relied heavily on UML modeling during design sessions. NetPeople is actively being improved in Canada and Japan by Inago Inc. and has been deployed on several different customer platforms in Japan.
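
The silhouette-as-window-border effect can be approached by reading back the rendered frame's alpha channel and converting it into opaque regions. The sketch below covers only the platform-independent part, scanning an alpha buffer into horizontal spans of opaque pixels; it is an assumed approach, not necessarily how NetPeople did it, and the `Span` and `silhouetteSpans` names are hypothetical. On Win32, such spans could become rectangles via `CreateRectRgn`, be merged with `CombineRgn(..., RGN_OR)` and be applied with `SetWindowRgn`. Note that `glReadPixels` returns rows bottom-up, so row order may need flipping.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// A horizontal run of opaque pixels on one row: columns [x0, x1).
struct Span {
    int y, x0, x1;
};

// Scan a row-major alpha buffer (width * height bytes) and collect runs of pixels
// whose alpha is at or above `threshold`. The union of the spans is the silhouette.
std::vector<Span> silhouetteSpans(const std::vector<std::uint8_t>& alpha,
                                  int width, int height, std::uint8_t threshold) {
    assert(alpha.size() == static_cast<std::size_t>(width) * height);
    std::vector<Span> spans;
    for (int y = 0; y < height; ++y) {
        int x = 0;
        while (x < width) {
            // Skip transparent pixels.
            while (x < width && alpha[y * width + x] < threshold) ++x;
            int start = x;
            // Extend the run across opaque pixels.
            while (x < width && alpha[y * width + x] >= threshold) ++x;
            if (x > start) spans.push_back({y, start, x});
        }
    }
    return spans;
}
```

Merging runs per row keeps the region count proportional to the silhouette's outline rather than to its pixel count.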

Awviewer:

Viewing and data translation for Direct3D content

(1997-1998 at Alias|wavefront - now Autodesk)

I've done some work through my consulting company, Digital Monkeys Computer Graphics Inc., for Alias|wavefront's PowerAnimator product. I was part of the Games Team, building data translation tools that exported geometry, joint and mesh animation, and lighting to Microsoft's Direct3D file format. I also had to create my own Direct3D viewer to test the fidelity of exported content. This was my first commercial software project and introduced me to the DirectDraw and Direct3D APIs, which had just been released at the time. Some challenges I had to solve were right-handed to left-handed coordinate system conversion, animation keyframe compression, whole-mesh animation, and exporting nonlinear animation sequences. I also had to reverse engineer the Direct3D binary file format, since no documentation of the format was available at the time.
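
The right-handed to left-handed conversion usually comes down to a mirror. Direct3D 9's documentation on coordinate systems suggests scaling world space by -1 in Z and passing each triangle's vertices v0, v1, v2 as v0, v2, v1 so faces keep the intended front side. The sketch below applies that recipe directly to positions, rotations and index lists; the types and function names are hypothetical and this is not the original exporter's code. For a rotation quaternion, mirroring Z leaves the angle unchanged and maps the rotation axis to (-x, -y, z), so only the x and y components change sign.

```cpp
#include <array>
#include <cassert>
#include <utility>
#include <vector>

struct Vec3 { double x, y, z; };
struct Quat { double w, x, y, z; };

// Mirror a position or normal across the XY plane (negate Z) to switch handedness.
Vec3 toLeftHanded(const Vec3& v) { return {v.x, v.y, -v.z}; }

// The same mirror applied to a rotation: the axis becomes (-x, -y, z) and the
// angle is unchanged, so the quaternion's x and y components change sign.
Quat toLeftHanded(const Quat& q) { return {q.w, -q.x, -q.y, q.z}; }

// Mirroring reverses triangle orientation, so reorder v0, v1, v2 as v0, v2, v1.
void flipWinding(std::vector<std::array<int, 3>>& triangles) {
    for (auto& t : triangles) std::swap(t[1], t[2]);
}
```

Animation keys need the same treatment as static geometry: every exported translation key goes through the `Vec3` overload and every rotation key through the `Quat` overload.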
